
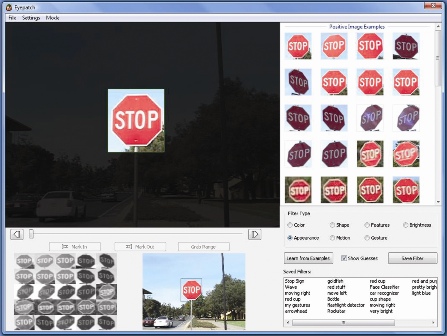

Eyepatch is a rapid prototyping tool designed to simplify the process of developing computer vision applications. Eyepatch allows novice programmers to extract useful data from live video and stream that data to other rapid prototyping tools, such as Adobe Flash, d.tools, and Microsoft Visual Basic. Eyepatch has two basic modes:

Eyepatch allows designers to create, test, and refine their own customized classifiers, without writing any specialized code. Its diversity of classification strategies makes it adaptable to a wide variety of applications, and the fluidity of its classifier training interface illustrates how interactive machine learning can allow designers to tailor a recognition algorithm to their application without any specialized knowledge of computer vision.


Maynes-Aminzade, D., T. Winograd, and T. Igarashi. Eyepatch: Prototyping Camera-based Interaction through Examples. UIST: ACM


Video: UIST Eyepatch Overview (WMV, 24 MB)


Eyepatch Installer for Windows (25 MB)


Dan Maynes-Aminzade


Dan Maynes-Aminzade (monzy at stanford dot edu)